TraceDiff: Debugging Unexpected Code Behavior Using Trace Divergences

Abstract

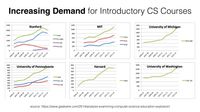

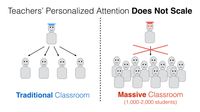

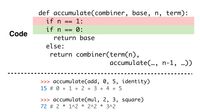

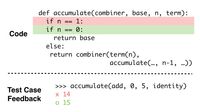

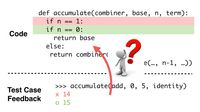

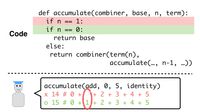

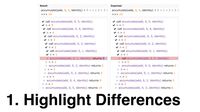

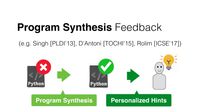

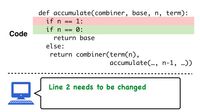

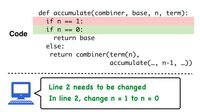

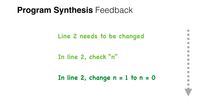

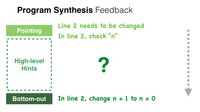

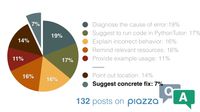

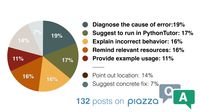

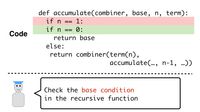

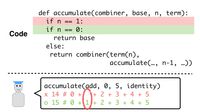

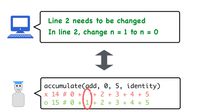

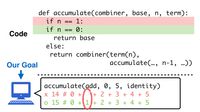

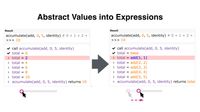

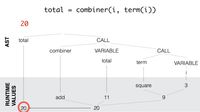

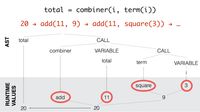

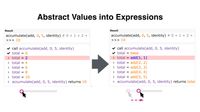

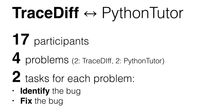

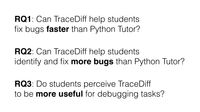

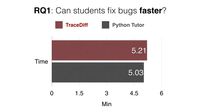

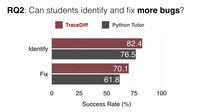

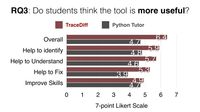

Recent advances in program synthesis offer means to automatically debug student submissions and generate personalized feedback in massive programming classrooms. When automatically generating feedback for programming assignments, a key challenge is designing pedagogically useful hints that are as effective as the manual feedback given by teachers. Through an analysis of teachers’ hint-giving practices in 132 online Q&A posts, we establish three design guidelines that an effective feedback design should follow. Based on these guidelines, we develop a feedback system that leverages both program synthesis and visualization techniques. Our system compares the dynamic code execution of both incorrect and fixed code and highlights how the error leads to a difference in behavior and where the incorrect code trace diverges from the expected solution. Results from our study suggest that our system enables students to detect and fix bugs that are not caught by students using another existing visual debugging tool.
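To make the idea of a trace divergence concrete, here is a minimal Python sketch. It is not the TraceDiff implementation: the helper names `record_trace` and `first_divergence`, and the two toy functions, are hypothetical and chosen only for illustration. The sketch records a snapshot of local variables at each executed line using the standard `sys.settrace` hook, then reports the first step at which the incorrect and fixed runs disagree.

```python
import sys


def record_trace(func, *args):
    """Run func(*args); return (list of local-variable snapshots, result).

    One snapshot is taken at every 'line' event inside func itself,
    i.e. just before each line of func executes.
    """
    snapshots = []
    target_code = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not target_code:
            return None  # skip frames that belong to other functions
        if event == "line":
            snapshots.append(dict(frame.f_locals))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore the earlier trace hook
    return snapshots, result


def first_divergence(trace_a, trace_b):
    """Return (step, state_a, state_b) for the first differing snapshot.

    Returns None when the traces agree on their common prefix; a real
    tool would also need to handle traces of different lengths.
    """
    for step, (state_a, state_b) in enumerate(zip(trace_a, trace_b)):
        if state_a != state_b:
            return step, state_a, state_b
    return None


# Hypothetical student submission and a fixed version of it.
def buggy_total(nums):
    total = 0
    for n in nums:
        total = n  # bug: overwrites the running total
    return total


def fixed_total(nums):
    total = 0
    for n in nums:
        total += n
    return total


buggy_trace, _ = record_trace(buggy_total, [1, 2, 3])
fixed_trace, _ = record_trace(fixed_total, [1, 2, 3])
divergence = first_divergence(buggy_trace, fixed_trace)
if divergence is not None:
    step, buggy_state, fixed_state = divergence
    print(f"step {step}: total is {buggy_state['total']}, "
          f"expected {fixed_state['total']}")
```

Comparing snapshots step by step only works here because the two functions have the same line structure. When a fix adds or removes lines, the traces would first need to be aligned, for example by comparing the history of values each variable takes.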

Publication

Ryo Suzuki, Gustavo Soares, Andrew Head, Elena Glassman, Ruan Reis, Melina Mongiovi, Loris D'Antoni, and Björn Hartmann. 2017. TraceDiff: Debugging Unexpected Code Behavior Using Trace Divergences. In Proceedings of the 2017 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC '17). IEEE Press, Piscataway, NJ, USA, 107-115.

DOI: https://doi.org/10.1109/VLHCC.2017.8103457

Ryo Suzuki, Gustavo Soares, Elena Glassman, Andrew Head, Loris D'Antoni, and Björn Hartmann. 2017. Exploring the Design Space of Automatically Synthesized Hints for Introductory Programming Assignments. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '17). ACM, New York, NY, USA, 2951-2958.

DOI: https://doi.org/10.1145/3027063.3053187
